Interface to a Technical System, Multi-sensory Interaction and for the Generation of Emotion, Safety and Trust

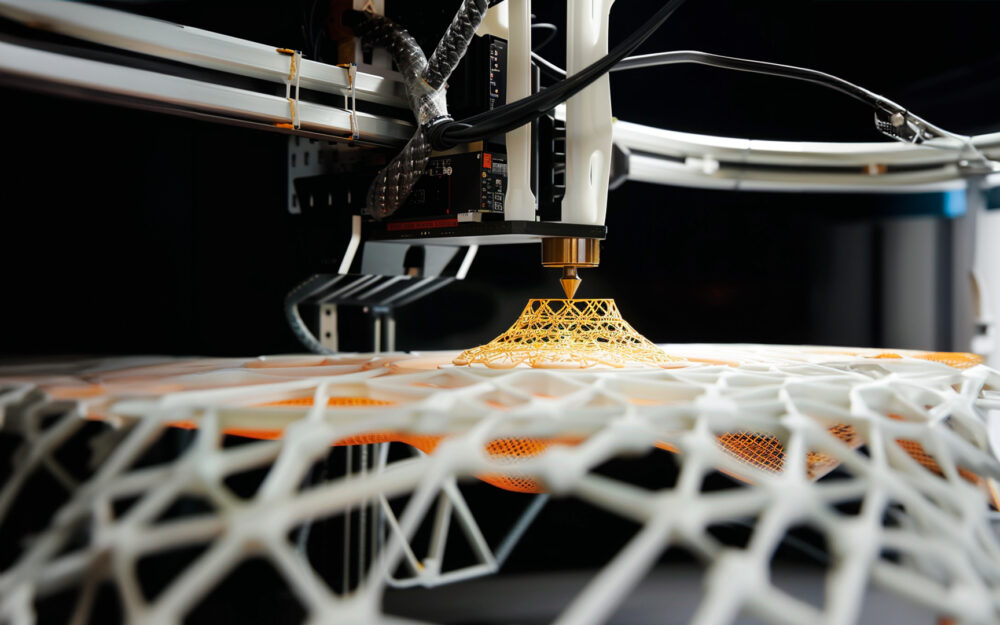

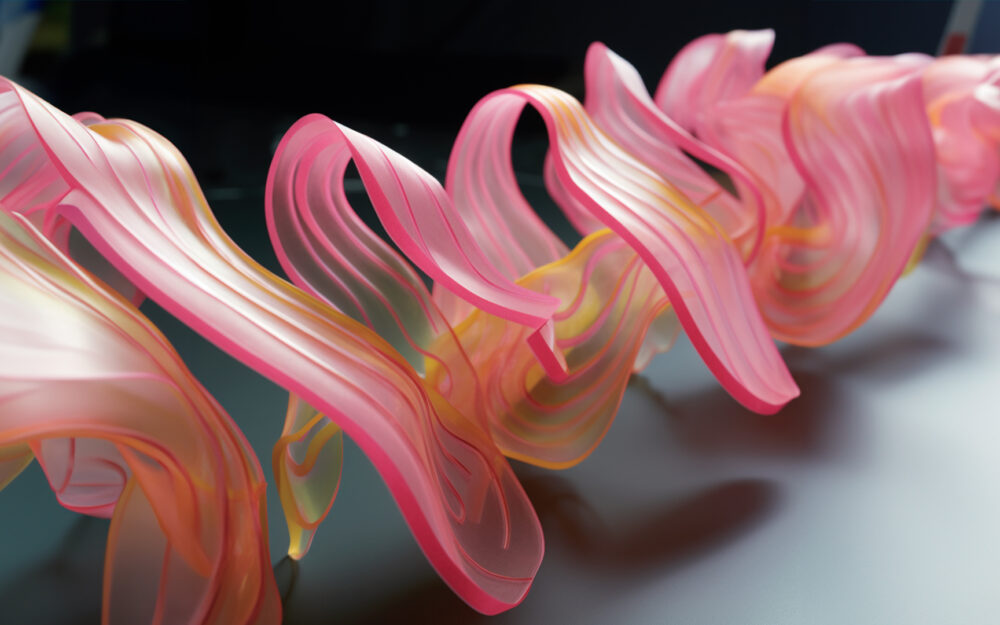

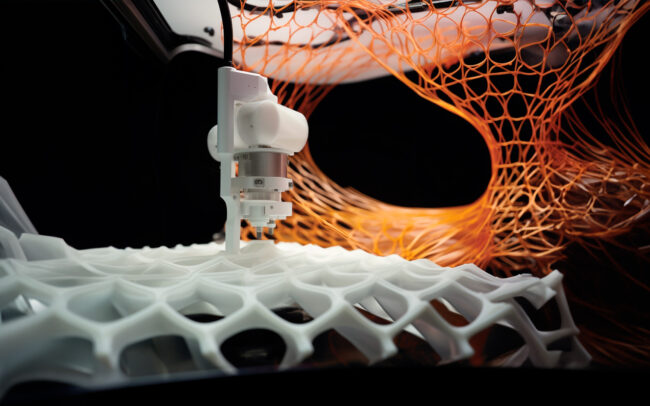

The transdisciplinary project “Autonomous Materials: Interface to a Technical System, Multi-Sensory Interaction and for the Generation of Emotion, Safety and Trust” deals with the production of shape-changing, dynamic and smart materials for intuitive, multi-sensory interaction, with a focus on functional surfaces and textiles in a spatial context. Its novelty lies in the cooperative, transdisciplinary development of a holistic composite of material and human-machine interface (HMI). The project combines expertise from material design, physical HMI and 3D printing by fused granular fabrication (FGF) to develop smart, textile-based materials for bodily interaction and to produce them at large scale.

Over the course of the project, geometries, topologies and large-volume smart material systems will be developed with various levels of integrated interaction, dynamics and function, for different applications and modes of operation. Options for transferring them will be prepared in cooperation with experts. A study-based evaluation serves to verify and quantify the results, with the aim of designing transformative materials and surfaces that act as haptic interfaces and foster an emotional connection between the user and the underlying product or technology.